As retailers seek to unlock the value of computer vision for product recognition, they must first overcome a fundamental challenge: getting it to work. In this post, we explore the significant difficulties of building a computer vision engine sophisticated enough to handle variations in occlusion, positioning, and lighting, as well as products that differ only minimally from one another.

Given computer vision’s vast potential to support an almost unlimited number of retail use cases, and the quantifiable, pent-up demand for it to do so, it’s almost astonishing that AI-based computer vision hasn’t already become commonplace in retail environments. However, as we highlighted in our recent two-part blog series, the technical and operational difficulties of scaling computer vision are significant.

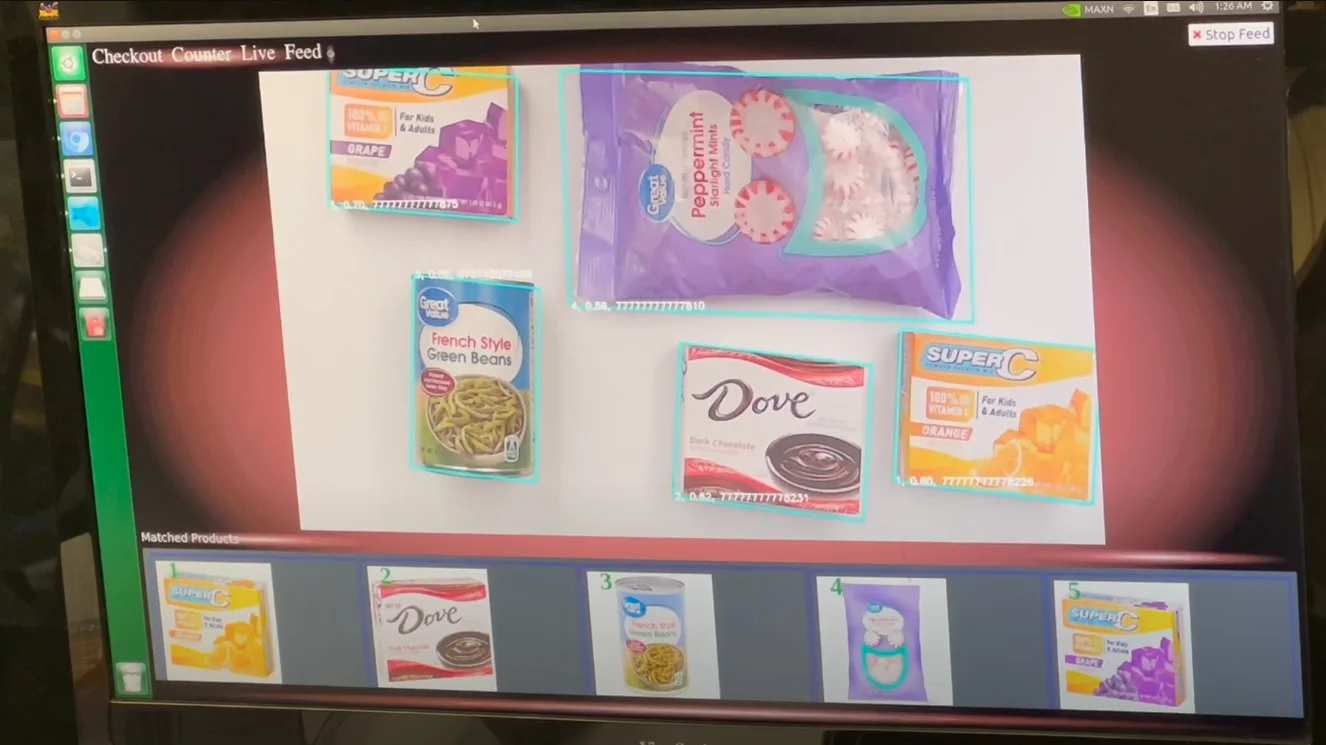

In this post, we explore the first hurdle: the complexity of achieving consistent and accurate product recognition in real-world retail environments. That qualifier, “real-world retail environments,” is a critical one. Nearly every major retailer and retail solutions provider has an AI team that is either developing its own computer vision models or integrating existing retail computer vision models into new solutions. Often, those models work, but only in a lab where environmental factors are tightly controlled and managed.

Unfortunately, solutions that work so well in the lab often struggle when faced with the conditions in actual retail stores and with untrained (or, at best, poorly trained) users. Let’s look at what happens and why.

How occlusion and positioning impact computer vision accuracy

A computer vision system works best with full frontal, unobstructed views of its subject. Real-world conditions are rarely so ideal, however: products are frequently piled up, hidden, or obstructed, which makes accurate identification much more difficult. At the checkout, for instance, a consumer’s or cashier’s hands might block key information or hold a product at an unusually steep angle. On shelves, other products, crumpled packaging, or deep shelving can make it hard to get a clear view.

Occlusion occurs when one object hides or blocks part of another, and it can affect a computer vision system’s ability to detect, track, and recognize the occluded object. Products partially hidden behind others or obstructed by store fixtures may not be fully visible to the AI system, leading to inaccurate or incomplete identification. A computer vision system therefore needs to detect that an object is occluded and then either gather more information (for example, another view), draw on additional context, or use predictive models to infer what the object is.
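To make the “detect, then decide” step concrete, here is a minimal Python sketch. It assumes, hypothetically, that an upstream detector reports axis-aligned bounding boxes both for the product and for anything covering it (a hand, another product, a shelf edge). The code estimates what fraction of the product remains visible and, below an illustrative threshold, asks for more context instead of guessing. The `Box` type, the 0.6 threshold, and the action names are our own assumptions, not any particular vendor’s method.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixels (hypothetical detector output)."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        # Degenerate or inverted boxes have zero area.
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def clip(box: Box, to: Box) -> Box:
    """Intersection of two boxes; zero area if they do not overlap."""
    return Box(max(box.x1, to.x1), max(box.y1, to.y1),
               min(box.x2, to.x2), min(box.y2, to.y2))


def union_area(boxes: list[Box]) -> float:
    """Exact area covered by possibly overlapping boxes (coordinate compression)."""
    boxes = [b for b in boxes if b.area() > 0]
    xs = sorted({v for b in boxes for v in (b.x1, b.x2)})
    ys = sorted({v for b in boxes for v in (b.y1, b.y2)})
    total = 0.0
    for xa, xb in zip(xs, xs[1:]):
        for ya, yb in zip(ys, ys[1:]):
            cx, cy = (xa + xb) / 2, (ya + yb) / 2
            # A grid cell is covered if its center lies inside any box.
            if any(b.x1 < cx < b.x2 and b.y1 < cy < b.y2 for b in boxes):
                total += (xb - xa) * (yb - ya)
    return total


def visible_fraction(product: Box, occluders: list[Box]) -> float:
    """Share of the product box not covered by any occluder."""
    hidden = union_area([clip(o, product) for o in occluders])
    return 1.0 - hidden / product.area()


def next_action(product: Box, occluders: list[Box], min_visible: float = 0.6) -> str:
    """Classify only when enough of the product is visible (threshold is illustrative)."""
    if visible_fraction(product, occluders) >= min_visible:
        return "classify"
    return "request_more_context"  # e.g. another camera angle or a prompt to reposition
```

For example, a hand covering the right half of a product’s box leaves a visible fraction of 0.5, so this sketch would ask for another view rather than classify. A production system might estimate occlusion very differently; explicit box geometry is simply the easiest way to show the decision.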

Similarly, the same product can appear vastly different depending on its orientation, packaging, and positioning. If a computer vision model has been trained only on images of a product from a frontal view, for example, it might struggle to recognize the same product from a side or angled view.

The challenge of lighting in computer vision product recognition

In addition to the issues outlined above, inconsistent or poor lighting can further challenge product recognition. Consider a well-known human example: “The Dress,” the photo that went viral online in 2015. Differences in how people perceived and interpreted its colors led to a global debate over whether the dress was black and blue or white and gold. Computer vision systems obviously operate differently, but changes in lighting (bright or dim, yellow- or blue-hued, direct or diffused, natural or artificial) can dramatically change how a product appears in an image.

Mass-market retailers such as Walmart or Target may have greater control over, and consistency in, their lighting, while others, such as convenience stores, can vary tremendously from store to store. Even within a brightly lit store, something as simple as changing daylight or a large promotional display can create shifting lighting conditions or unexpected shadows that confuse the system.
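One way to probe (or train for) this kind of variability is to re-render the same product image under simulated lighting. The Python sketch below applies a deliberately crude global model: a brightness gain plus a warm or cool color cast, clamped to the 0 to 255 range. The `relight` function, the 0.2 tint strength, and the named conditions are hypothetical choices for illustration; localized effects such as shadows and glare are not modeled.

```python
from __future__ import annotations

Pixel = tuple[int, int, int]  # (R, G, B), each 0..255


def relight(pixels: list[Pixel], gain: float = 1.0, warmth: float = 0.0) -> list[Pixel]:
    """Simulate a global lighting change.

    gain:   overall brightness multiplier (< 1 dims, > 1 brightens).
    warmth: -1..1; positive shifts toward yellow (boost red, cut blue),
            negative shifts toward blue. The 0.2 tint strength is arbitrary.
    """
    out: list[Pixel] = []
    for r, g, b in pixels:
        scaled = (r * gain * (1 + 0.2 * warmth), g * gain, b * gain * (1 - 0.2 * warmth))
        # Clamp to the valid 8-bit range (bright regions saturate at 255).
        out.append(tuple(min(255, max(0, round(c))) for c in scaled))
    return out


# Hypothetical test conditions loosely mirroring the store scenarios above.
LIGHTING_CONDITIONS = {
    "neutral": {"gain": 1.0, "warmth": 0.0},
    "dim_convenience_store": {"gain": 0.5, "warmth": 0.6},
    "bright_daylight_window": {"gain": 1.5, "warmth": -0.4},
}


def lighting_variants(pixels: list[Pixel]) -> dict[str, list[Pixel]]:
    """Render one product image under every simulated condition."""
    return {name: relight(pixels, **params) for name, params in LIGHTING_CONDITIONS.items()}
```

Feeding each variant to the recognition model and comparing predictions is a cheap first check of lighting sensitivity, though it is no substitute for testing in real stores.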

Attention to detail: Meeting the needs of fine-grained product identification

A significant challenge in retail is fine-grained recognition. Consumer packaged goods manufacturers frequently use nearly identical packaging across a product line, with only minimal differences to indicate flavor, style, or type. It’s relatively easy for computer vision to tell a can of soup from a package of lightbulbs, for example, but distinguishing which flavor of soup, or which wattage of lightbulb, requires far greater precision. Some manufacturers maintain brand similarity but call out these differences prominently on their packaging; others represent them much more subtly, which challenges computer vision systems that must identify the correct product quickly. Identifying such minute differences becomes even harder when combined with the positioning, occlusion, and lighting challenges discussed above.
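One simple safeguard for near-identical products is to look not only at the model’s top guess but at how far ahead it is of the runner-up. The Python sketch below assumes a hypothetical classifier that returns a raw score per SKU; it converts the scores to probabilities with a softmax and flags the result as ambiguous when the top two are too close to call. The SKU names and the 0.2 margin are illustrative assumptions.

```python
from __future__ import annotations

import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw per-SKU scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {sku: math.exp(s - top) for sku, s in scores.items()}
    total = sum(exps.values())
    return {sku: e / total for sku, e in exps.items()}


def decide(scores: dict[str, float], min_margin: float = 0.2) -> tuple[str, tuple[str, ...]]:
    """Accept the top SKU only if it beats the runner-up by at least min_margin."""
    ranked = sorted(softmax(scores).items(), key=lambda kv: kv[1], reverse=True)
    best_sku, best_p = ranked[0]
    if len(ranked) == 1:
        return "accept", (best_sku,)
    runner_sku, runner_p = ranked[1]
    if best_p - runner_p >= min_margin:
        return "accept", (best_sku,)
    # Too close to call: e.g. two flavors in near-identical packaging.
    return "ambiguous", (best_sku, runner_sku)
```

An ambiguous result could then trigger a fallback, such as requesting another view, rather than committing to a possibly wrong SKU.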

Next steps for retailers

AI developers are well aware of these issues and use a number of techniques to improve accuracy and mitigate the effects of environmental conditions. However, these solutions often create problems of their own. One common approach is simply more training: photographing each product from multiple angles and under different conditions so the model learns to recognize it in each. This technique has several consequences. It can multiply the time it takes to upload a full product catalog, and to maintain and update that catalog over time. It can also lead to overtraining, or “overfitting,” in which the model fits its training images so closely that it becomes less able to generalize and adapt to the variability of real-world scenarios.

Another approach is to develop models that “fill in the blanks,” inferring what the whole product looks like from seeing just part of it. While technically more difficult to develop, this approach can greatly expand a platform’s ability to adapt to a wide range of store conditions without special training.

Regardless of the techniques AI developers use to improve the accuracy of computer-vision-based product recognition, what matters is the results. As retailers consider how computer vision will be used in practice, they need to understand how the factors outlined here affect product recognition accuracy. In a controlled environment, computer vision engines can identify products quickly and accurately, but how do accuracy and speed change when environmental conditions change? How quickly and accurately can computer vision distinguish different flavors and variations of the same product under variable conditions?
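Answering those questions requires breaking evaluation results down by condition rather than reporting a single headline number. The Python sketch below assumes a hypothetical log of test trials, each tagged with the condition it was captured under (the condition labels are our own examples), and reports sample count, accuracy, and median latency per condition.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Trial:
    condition: str        # hypothetical labels, e.g. "lab", "dim_lighting", "hand_occlusion"
    predicted_sku: str
    true_sku: str
    latency_ms: float


def summarize(trials: list[Trial]) -> dict[str, dict[str, float]]:
    """Group trials by condition and report n, accuracy, and median latency."""
    groups: dict[str, list[Trial]] = defaultdict(list)
    for t in trials:
        groups[t.condition].append(t)
    summary = {}
    for condition, ts in groups.items():
        correct = sum(t.predicted_sku == t.true_sku for t in ts)
        summary[condition] = {
            "n": len(ts),
            "accuracy": correct / len(ts),
            "median_latency_ms": median([t.latency_ms for t in ts]),
        }
    return summary
```

A large gap between the “lab” row and the in-store rows is exactly the lab-versus-real-world difference described above, and a per-condition breakdown on data resembling your own stores says more than a single accuracy figure.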

Understanding how a given computer vision engine addresses these issues is academically interesting, but the important question for product managers is not how developers have fixed them; it is whether those fixes work at scale under varied real-world conditions.